When it comes to live events, today’s audiences expect more personalised and immersive experiences, and technology is helping to make this possible. Real-time tools, such as virtual stage extensions, LED volumes, digital performers, and VR or AR experiences, enable producers and designers to experiment and respond to audiences in ways that were previously unachievable.

Dimension Live, a department within Dimension Studio, applies these technologies to live shows, location-based experiences, and multi-venue activations. Its work demonstrates how real-time workflows can support both creative teams and event producers, enabling them to plan and deliver more interactive experiences.

We speak with Simon Windsor, co-founder and co-CEO of Dimension Studio, Mark Bustard, executive producer, Dimension Live, and Oliver Ellmers, principal creative technology engineer at Dimension, about how the team uses real-time technology, the challenges and opportunities it offers, and what the next generation of live experiences could look like.

Dimension Live

Dimension Studio was founded 15 years ago and has built up extensive experience creating immersive experiences and integrating real-time and 3D technologies into live settings and locations.

“That spans everything from music and sport to brand experiential work, visitor attractions, and location-based entertainment,” says Windsor. “We’ve been fortunate in that time to work with some of the biggest names in the industry.”

The launch of Dimension Live extends Dimension’s goal as a studio: to connect audiences with stories, worlds, and experiences in new ways.

“We have a history of bringing new technologies and innovations into the market, and Dimension Live consolidates that experience with a clear focus on the future of live entertainment, which we believe will become increasingly immersive, more personalised, and will leverage the technologies we’ve spent years developing.

“We’re excited to prove that out, and under Mark’s stewardship, Dimension Live is taking our capabilities to the next level as experiences increasingly harness these technologies.”

From live theatre to immersive experiences

Although it is difficult to go into specifics while projects are in development, Dimension Live is working across a broad range of sectors, which Bustard says shows the appetite for new technologies, especially real-time:

“We’re involved in everything from live theatre shows and DJ sets to live and virtual concerts, with a lot of focus on how to deliver truly immersive experiences using real-time technologies.

“We’re also supporting location-based experiences, so it’s a really wide-ranging portfolio.”

The team’s services break down into a few key areas:

“First is design and consultancy: helping clients access new technologies they might not be familiar with and breaking down the barriers to entry that often make them hard to adopt. From my background as an events producer, I know how difficult it can be to embrace new tech. You never know if it will last, or what’s coming next.”

Having experts with both deep experience and foresight of what’s down the track is invaluable.

The second area is enabling creatives to work in a fully integrated design process using real-time tools like Unreal Engine.

“That means you can design and technically visualise a visitor attraction, a live show, or even a linear production all in one environment. In the past, as a producer, I’d work with multiple design teams, each with its own software, and then have to piece it all together in my head.

“Now we can provide a collaborative, high-quality working environment from the start, which there’s a clear appetite for across the industry.”

Real-time technology tools

From there, Dimension Live brings in the creative tools it has developed through years of real-time work, such as volumetric capture, motion capture, tracking performers in a space to deliver personalised content, digital crowds, MetaHumans, and virtual humans.

“Increasingly, we’re also linking into our AI pipeline to create interactive, real-time content,” adds Bustard.

“A good example is creating a digital twin of a venue: building in the technical production to mirror the real-world space, extending it into a virtual world, and then adding characters or performers and tracking them to create the show. Some of this work started before I joined Dimension, with support from an Epic MegaGrant [from developer Epic Games], and it continues to evolve.

“That’s what excites me: the iterative nature of the work. Things are changing all the time, and we’re able to bring new technologies to bear in live experiences as they emerge.”

The benefits of real-time technology

The most significant advantage of real-time technology, Ellmers says, is the speed of iteration.

“Being able to work live, whether that’s in front of a screen with content creators or within the technical pipeline, and see instant feedback as you make changes, is incredibly powerful.

“In previous workflows, when you were designing content for a stage, you were often locked into your work ahead of the show without really seeing how it would play until maybe an hour before curtain.

“At that point, it was very difficult to make adjustments. Now, we can sit in a room and see everything happening in real time, which is a huge draw.”

The same applies to rehearsal workflows. For example, lighting designers used to program a show, hand over their production materials, and then have only a few hours on site to ensure everything was working.

“With real-time tools, they can collaborate directly with our creative teams ahead of time, visualise the show in detail, and arrive on site with everything already in place,” says Ellmers.

“For me, the key benefits are fast iteration and being able to front-load as much of the process as possible. Of course, live shows will always throw up surprises—there’s always a spanner in the works somewhere—but this approach eases a lot of nerves and allows everyone to go in far more prepared.”

Enhancing the possibilities

On the potential of real-time technology in live experiences, Windsor identifies two main areas of opportunity.

The first is inside the venue, enhancing the creative possibilities of a show: “We’re seeing radical new approaches: mixed reality, virtual venue extensions, virtual lighting, interactive and adaptive content. Collectively, these help reimagine the physical space and the nature of the show or attraction.”

The beauty of real-time rendering tools like Unreal Engine, or even generative AI used in a real-time context, is that you see the results instantly:

“When combined with technologies like computer vision or motion tracking, you can create personalised experiences that react to the audience in real time. The core show might remain the same, but its expression adapts to the audience and the data being harnessed in that moment.

“Increasingly, this also includes digital twinning, where physical experiences are paired with their virtual counterparts, something Dimension Live is designed to make more efficient for our partners.”

The second opportunity lies outside the venue: extending performances beyond a single location.

“Imagine a music artist performing in one city, and that performance being virtually interpreted in real time across nine other cities or streamed online in near real time. This opens the door to bespoke pop-up shows, where artists can experiment with creative delivery while staying true to the authenticity of the live performance.

“The same approach could connect museums or attractions, where each experience adapts based on the interactions happening at the others. In that sense, real time becomes the connective tissue linking locations.”

Windsor foresees a future of diverse, cost-efficient live experiences supported by real-time content creation and non-destructive workflows, which allow assets to be reused across multiple applications.

“That creates significant savings in time and cost, while also unlocking huge creative flexibility. That’s the future we’re excited about.”

What sets Dimension Live apart?

Traditionally, it’s been difficult for the live events industry to access virtual production and film technologies.

“What we’re doing at Dimension Live is something new,” says Bustard. “We often get unfairly grouped with VFX or traditional production studios, but that’s not really what we are. We’re focused on real-time, and that makes our offer very different.

“I’d say we don’t have a direct competitor in the marketplace. There are boutique agencies that provide one element, such as motion capture, character animation, or production support, but not an integrated service that brings all of those together.

“As a producer, I always wanted that: to be able to sit in a room with experts in motion capture, character and animation, and live events, and know we were all speaking the same language. No barriers, no one throwing their work over the wall and hoping someone else could pick it up.”

That’s why Dimension Live has set out to offer a truly integrated service with collaboration at its core.

“The team is used to working with partners and creatives, and they’ve adapted brilliantly from film, where they might work with directors for the screen, to live, where we’re collaborating with producers and storytellers creating shows or narrative-driven location-based entertainment (LBE).

“For me, the exciting part is the ability to take visionary ideas and bring them to life using technology appropriately and creatively. That’s energised me personally, and it’s what really excites our clients. It’s what sets Dimension Live apart.”

Technology & creativity combine

This new intersection of technology and creativity unlocks more doors for creators, explains Ellmers. “They can see their work instantly, make changes in real time, and immediately see the response. That speeds up their thinking process and lightens the load in terms of design workflow.

“Instead of going back to the drawing board and starting from scratch every time, they can make creative judgments and decisions on the spot. For me, that’s the best thing about working in the real-time space—it opens up more possibilities.”

Bustard adds that Dimension Live’s toolset is broad enough that creatives can use it in many different ways:

“We are seeing a trend towards personalisation and adding depth to content. An interesting recent example was Terminal 1 at Glastonbury. The overall piece was a heavily scripted, visceral form of immersive theatre, and Ollie created an AI deepfake interactive where, as you walked through the ‘airport arrival,’ your face was captured and mapped into video content further along.

“The technology added a striking twist, but we had to balance it carefully with traditional techniques, so it felt appropriate. That balance is something producers are starting to understand more as they see how our technology can be applied.”

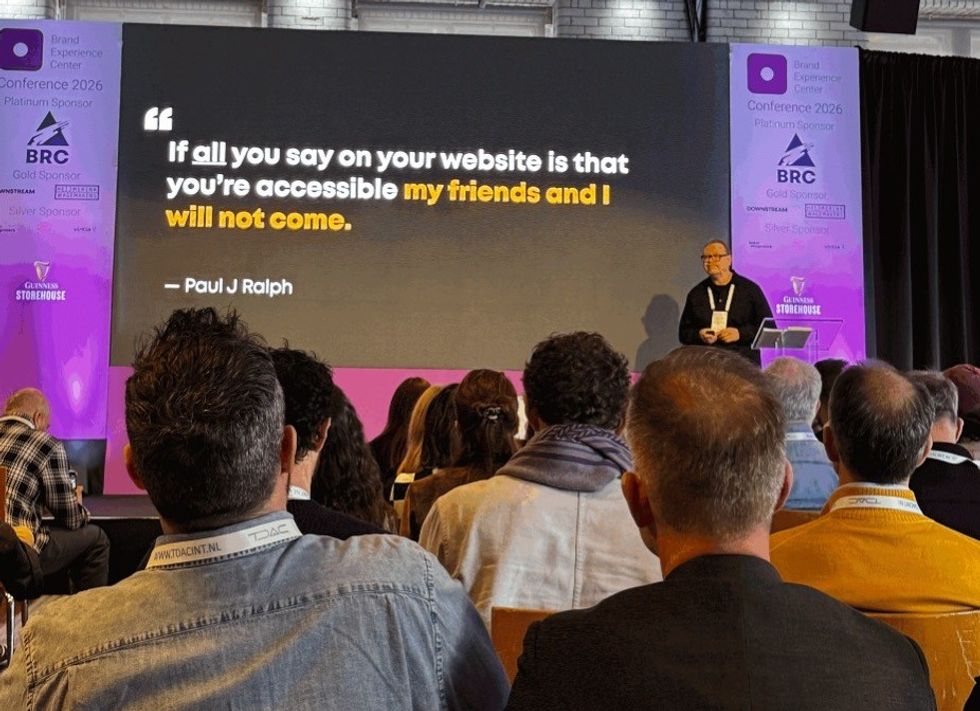

Another layer to that project was accessibility. The team shot it in 180-degree video and put it into a VR headset for people with accessibility requirements.

“For us and for the producing team, that was a chance to ask: how can we engage a wider audience with these technologies? One part was about adding depth and a subtle narrative spin. The other was about opening the experience to people who otherwise couldn’t take part.

“Working with the Glastonbury producers and the accessibility team, we were able to add real value to the overall experience.”

Real-time technology & the future of entertainment

Looking to the future, Windsor says:

“We live in a world now where fans don’t just want to meet their heroes or watch a story unfold. They want to be actively involved. They want to immerse themselves, play a role, connect with characters, and share experiences with other audience members in a much deeper way.

“And crucially, they don’t want the experience to end when the show, ride, or performance is over. They want it to extend beyond that moment.”

That’s where real-time technologies come in, and where Dimension Live is focused: on how the next generation of multi-dimensional experiences will evolve and bleed into other forms of engagement. For example, a live music show might extend beyond one location to multiple venues or platforms, all enabled by real-time rendering and tracking.

“Looking ahead, we see real-time rendering, generative AI, and computer vision technologies at the heart of the next generation of live experiences. These tools will provide greater data accuracy, immediate creative results, and more personalised interactions informed by audience data.

“That’s the trend we’re most excited about. We’ve spent years building technology and tools under the Dimension platform, and we see them as a real advantage for our clients in delivering that vision.”

Blurring the boundaries

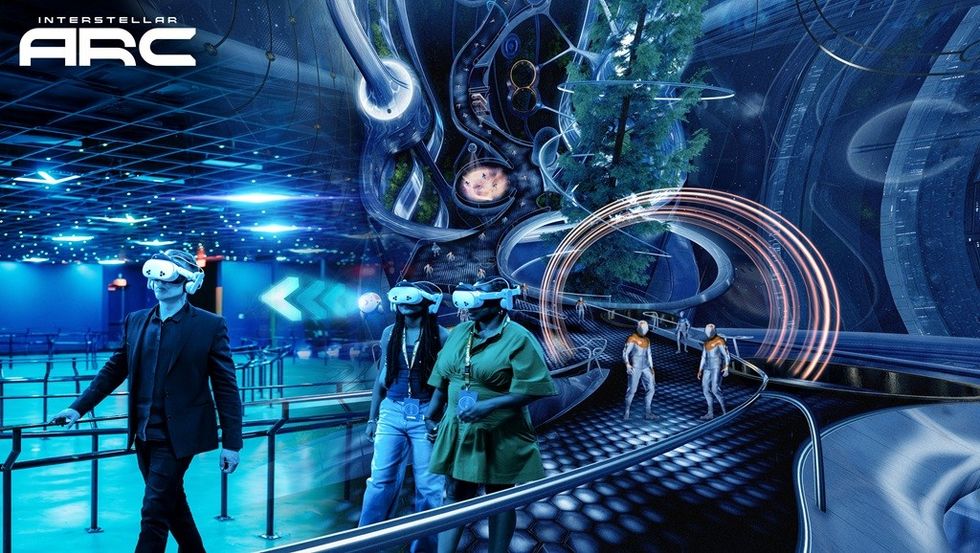

Ellmers adds that he’s excited about the extension of live experiences into different realms and worlds: “Being able to take an in-person event and expand it through wearable AR or VR into a synchronised wider world feels like the direction we’re heading—if not walking, then running. That’s where the buzz really is right now.”

For Bustard, it’s the blurring of boundaries between real-world and digital experiences:

“I’ve got two kids who go to loads of location-based entertainment, and when I ask them about their favourite experiences, one was Snoop Dogg as a boss in Fortnite. To them, there’s no distinction between that and a physical show like Gorillaz. It’s all an experience they share with friends and talk about afterwards, at school or online.

“That’s what excites me: being able to connect with audiences in the spaces they already inhabit. Right now, for example, we’re working on how our digital twin technology can live in different environments. It’s completely new frontier work; we’re creating tools and software that don’t exist yet.

“Doing something genuinely new, for the first time, is always exciting. And if it helps people get closer to the heroes and entertainment they love, then it has real longevity.”

Discover real-time technology

When asked what advice they would give to live experience curators who are exploring this kind of real-time technology for the first time, the trio are keen for people to dive in and experience the possibilities:

“My advice is simple: get in touch,” says Bustard. “If you want to use new technologies, don’t try to figure it all out alone. We’re very collaborative and happy to guide people, show them what the technology can do, and make it accessible. One barrier is misunderstanding what the technology can achieve; a common misconception is that AI is just a button you press and it does everything for you.

“The other barrier is fear. So, my advice is to jump in and engage with it.”

Windsor adds that it’s very easy these days to test ideas early:

“With real-time tools, you can experiment from the initial script or idea all the way through to final delivery, or use them for a specific role within that pipeline. The key is that you can test creative concepts early, adapt quickly, and learn without incurring large costs. Often, these early tests show there’s a better way to do something or open up new possibilities.

“We encourage our clients and partners to engage with real-time technology early in the process, to explore what’s possible before investing heavily in a project.”

Ellmers says: “Get out there and take the technology into the real world. Step out of the office or studio and experiment on-site. Real-time technology offers far more insight and flexibility when you can see and adjust things in the environment where they’ll actually exist.

“Getting out there and playing with it is really, really important.”

Charlotte Coates is blooloop's editor. She is from Brighton, UK and previously worked as a librarian. She has a strong interest in arts, culture and information and graduated from the University of Sussex with a degree in English Literature. Charlotte can usually be found either with her head in a book or planning her next travel adventure.